Optimizing Web Content

The Request is Evil, Stamp it Out Where Possible

I’ve blogged previously about how we build fast web servers for hosting GeoNet content. If you care about a good user experience then you should care about having a fast web site and that means you should care about optimizing the content you serve as well as optimizing the web servers.

When you surf the web your browser requests objects (HTML, CSS, JavaScript, images, etc.) from a web server, downloads them and uses those objects to render the web page that you view. The number of requests and the size of the objects both contribute to the so-called page weight. A weighty page is a slow page and can make for a bad browsing experience for your users.

As a quick aside: give the web developers a fighting chance and use a modern browser. If you’re not using one then stop reading this and go install one now. Now! Go!

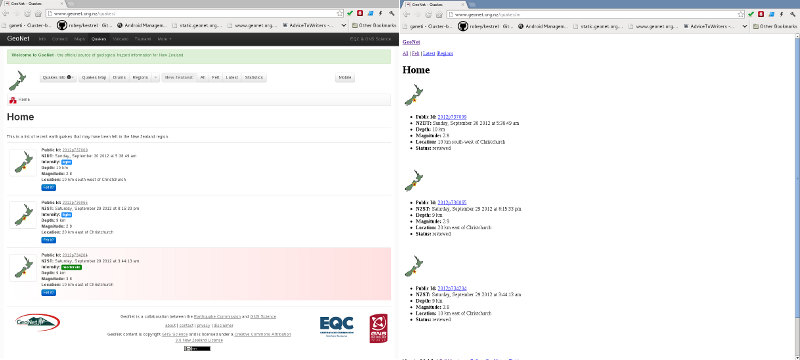

Consider the two web pages shown here. They both have exactly the same information content: the location of three earthquakes. The one on the left uses 9 requests and a download size of 92.4KB; the one on the right needs only 3 requests and a download size of 5.91KB. This is the sort of difference that can have a huge impact when your web servers are running at over 16,000 requests a second.
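To put rough numbers on that, here is a back-of-the-envelope sketch. It assumes every page view arrives with an empty cache and so costs the full number of requests, and it treats 16,000 requests a second as a fixed budget to be shared out. Both are simplifications of mine, not measurements.

```python
def page_views_per_second(requests_per_second: float, requests_per_page: int) -> float:
    """Page views a server can sustain if every view costs requests_per_page requests."""
    return requests_per_second / requests_per_page


CAPACITY = 16_000  # requests per second, the figure quoted in the text

heavy_views = page_views_per_second(CAPACITY, 9)  # the 9 request, 92.4KB page
light_views = page_views_per_second(CAPACITY, 3)  # the 3 request, 5.91KB page
size_ratio = 92.4 / 5.91  # how much more data the heavy page puts on the wire

print(f"heavy page: about {heavy_views:.0f} page views per second")
print(f"light page: about {light_views:.0f} page views per second")
print(f"the heavy page is about {size_ratio:.1f} times larger to download")
```

Under those assumptions the same servers handle three times as many page views of the lighter page.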

Now I’m not suggesting that we have no style or design on the internet (although you could probably run the entire web on a small fraction of the current number of servers and the CO2 emissions savings would be immense), but I am suggesting that you should strive to get your information density, in terms of requests and download size, as high as possible.

Optimizing Content

The fastest way to improve your web content performance is to become a Steve Souders devotee. He literally wrote the book on web performance and then followed it with another one. While you read his writings, or use any of the many open source tools he has been involved with, tip your hat to his current employer Google and his former employer Yahoo for supporting all of his work to improve the internet. If you work on web content and you haven’t heard of Steve Souders then put down your triple latte and turn off that round-cornered mp3 player - you have important things to learn!

There are a couple of browser extensions that condense all of the rules and performance knowledge into simple-to-use tools. Have a look at either YSlow or PageSpeed. The Google Chrome Developer Tools are also very useful.

The Request Is Evil

The greatest killer of a fast-loading web site is the request, and many web optimization techniques are about reducing the number of requests your browser needs to make to render a page. A request is made every time the browser has to fetch an object to display a web page. Making a request requires a TCP connection to the web server, and connections are expensive to set up and tear down. Browsers open only a limited number of parallel connections to a server (usually 6 to 8, depending on the browser), so on a page with many objects the remaining requests have to wait their turn. This all takes time and on a slow connection it can take a lot of time. In fact, depending on network speed, the requests themselves can cost much more than large objects like images.
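A toy model makes the point about requests versus bytes. This is a deliberately crude sketch, not how any real browser schedules its work: it assumes at most six requests are in flight at once, one round trip to set up the connections, one round trip per batch of requests, and a fixed bandwidth. The function name and the 300 ms round trip are my own illustrative choices, and I treat 1KB as 1,000 bytes.

```python
import math


def estimate_load_seconds(
    num_requests: int,
    total_bytes: int,
    rtt_seconds: float,
    bandwidth_bytes_per_second: float,
    parallel_requests: int = 6,
) -> float:
    """Crude page load estimate: round trips for the requests plus raw transfer time."""
    # Requests beyond the parallel limit wait for an earlier batch to finish.
    batches = math.ceil(num_requests / parallel_requests)
    # One round trip for connection setup, then one round trip per batch.
    latency = rtt_seconds * (1 + batches)
    transfer = total_bytes / bandwidth_bytes_per_second
    return latency + transfer


# The two example pages from earlier, on a slow link: 300 ms round trip, 1 MB/s.
heavy = estimate_load_seconds(9, 92_400, 0.3, 1_000_000)
light = estimate_load_seconds(3, 5_910, 0.3, 1_000_000)
# A page needing 200 requests, even if its total size were the same 92.4KB.
bloated = estimate_load_seconds(200, 92_400, 0.3, 1_000_000)

print(f"9 requests:   {heavy:.2f} s")
print(f"3 requests:   {light:.2f} s")
print(f"200 requests: {bloated:.2f} s")
```

In this model almost all of the time goes on round trips rather than on moving bytes, which is why the request count is the first thing to attack.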

So if you want to make your web content faster the first thing to get a handle on is the number of requests involved. Then start stamping out requests wherever you can. Reducing the number of requests is the quickest way to make your pages load faster.

So take a look at the number of requests needed to load your web pages, or ours. Open the developer tools in Google Chrome, load the page with an empty cache (or force a refresh) and look at the Network tab. Check GeoNet’s content (9-10 requests for the home page), then check some large online media sites (renowned criminals for slow pages). You may be shocked at the number of requests needed to load some of those pages - over 200?! Oh my, that’s really going to stuff my web browsing experience.
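If you would rather count than eyeball, the Chrome network panel can export what it recorded as a HAR file, which is plain JSON. The sketch below summarises one. It assumes the standard HAR layout (`log.entries`, each with a `request.url` and a `response.bodySize` that may be -1 when unknown); the function name and the sample data are made up for illustration.

```python
from collections import Counter
from urllib.parse import urlsplit


def summarize_har(har: dict) -> dict:
    """Count requests, body bytes and requests per host in a parsed HAR document."""
    entries = har["log"]["entries"]
    hosts = Counter(urlsplit(entry["request"]["url"]).hostname for entry in entries)
    # bodySize is -1 when the tool could not determine it, so clamp it to zero.
    body_bytes = sum(max(entry["response"].get("bodySize", 0), 0) for entry in entries)
    return {
        "requests": len(entries),
        "body_bytes": body_bytes,
        "requests_per_host": dict(hosts),
    }


# A tiny hand-written stand-in for a real export.
sample_har = {
    "log": {
        "entries": [
            {"request": {"url": "http://example.org/"}, "response": {"bodySize": 5000}},
            {"request": {"url": "http://example.org/site.css"}, "response": {"bodySize": 800}},
            {"request": {"url": "http://static.example.org/logo.png"}, "response": {"bodySize": -1}},
        ]
    }
}
summary = summarize_har(sample_har)
print(summary)
```

For a real page, load the exported file with `json.load` and pass the result to `summarize_har`.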

Also, every single request requires web server resources to serve it. The more requests for your content, the bigger the hammer you’re going to need on the server. This means that optimizing your web content can result in direct cost savings in the data centre.

Making it Better

To improve the performance of GeoNet web content we use many of the approaches advocated by Steve Souders. I’ll cover the important ones here.

CSS Sprites

Use CSS sprites to combine images. Here’s our sprite for the common logos and icons. Loading those images separately is 8 requests. Combined it is 1. The GeoNet home page uses 5 of those images for the cost of only 1 request. That’s a saving of 4 requests compared to loading them individually: instant win. The cost of having a slightly larger image than we need for the home page (the extra 3 images that aren’t used on the home page) is more than covered by the reduction in the number of requests. But don’t take my or anyone else’s word for it; go dummy up some pages and investigate the size versus request trade-off using Hammerhead for yourself.
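The CSS side of a sprite is mechanical: every icon gets the same background image, shifted by a negative offset so that only its own rectangle shows. Here is a minimal sketch that generates those rules. The class names, the sprite path and the pixel coordinates are invented for the example and are not GeoNet’s actual sprite layout.

```python
def sprite_css(sprite_url: str, icons: dict) -> str:
    """Build one CSS rule per icon from its (x, y, width, height) box in the sprite."""
    rules = []
    for name, (x, y, width, height) in icons.items():
        rules.append(
            f".{name} {{ background: url('{sprite_url}') no-repeat -{x}px -{y}px; "
            f"width: {width}px; height: {height}px; }}"
        )
    return "\n".join(rules)


# Hypothetical layout: two icons sitting side by side in a single image.
css = sprite_css(
    "/images/sprite.png",
    {
        "logo": (0, 0, 40, 40),
        "icon-quake": (40, 0, 16, 16),
    },
)
print(css)
```

Both rules point at the same URL, so the browser fetches the image once however many icons the page uses.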

Combine and Minify CSS and JavaScript

This reduces requests and also download size. We use YUI Compressor. There are other options out there. It can be hard to get this perfect across an entire site when different pages need different CSS and JavaScript.

And here’s a hint: Adding ‘min’ to your file names to show that you have minified them adds a completely unnecessary step to deployment (changing your script or CSS names in the pages). Keep the same name and minify the files as part of the deployment process or possibly even on the fly as part of serving them.
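To show the shape of a combine-and-minify deployment step, here is a deliberately naive sketch for CSS. It is a stand-in for a real minifier, not a replacement for one: it strips comments and squeezes whitespace with regular expressions and will mangle quoted strings that contain those characters. The function names are mine.

```python
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier: drop comments and unnecessary whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove /* comments */
    css = re.sub(r"\s+", " ", css)  # collapse runs of whitespace
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)  # no spaces around punctuation
    css = re.sub(r"\s*:\s+", ":", css)  # "color: red" becomes "color:red"
    return css.strip()


def combine_and_minify(sources: list) -> str:
    """Concatenate several stylesheets, in order, into one minified string."""
    return "".join(minify_css(source) for source in sources)


base = "/* header */\nbody {\n  color: red;\n  margin: 0;\n}\n"
headings = "h1, h2 { font-weight: bold; }"
combined = combine_and_minify([base, headings])
print(combined)
```

In a deployment script the combined output would be written under the file name the pages already reference, so no page needs editing.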

Compress Images

Use a tool like pngcrush or an online service to compress images. The reductions, even with no loss of quality, can be significant.

Set Far Future Expires Times on Content

The longer the expires time on an object is, the more likely it will still be in the browser’s cache next time it is needed, which can mean no request to the server is needed at all. The expires time is a web server configuration problem that is made easier if you separate your content into directories organised by how often it needs to be updated (e.g., images versus icons).
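In practice this is set in the web server configuration, but the headers involved are easy to show. The sketch below builds an `Expires` header and the matching `Cache-Control: max-age` for a given lifetime, chosen by directory. The directory prefixes and lifetimes are hypothetical examples, not our settings.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime

# Hypothetical policy: cache lifetime in days, by path prefix.
LIFETIME_DAYS = {
    "/icons/": 365,  # rarely change
    "/images/": 30,  # change more often
}


def cache_headers(path: str, now: datetime) -> dict:
    """Return caching headers for a path, or no headers if no prefix matches."""
    for prefix, days in LIFETIME_DAYS.items():
        if path.startswith(prefix):
            expires = now + timedelta(days=days)
            return {
                # HTTP dates are always expressed in GMT; now must be UTC-aware.
                "Expires": format_datetime(expires, usegmt=True),
                "Cache-Control": f"public, max-age={days * 24 * 60 * 60}",
            }
    return {}


now = datetime(2013, 1, 1, tzinfo=timezone.utc)
icon_headers = cache_headers("/icons/quake.png", now)
page_headers = cache_headers("/index.html", now)
print(icon_headers)
```

The catch with far future expiry is that a changed file needs a new name or URL, otherwise browsers keep using the copy they already have.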

Use Domain Sharding

A browser limits the number of parallel connections (usually 6 to 8) that it will open to a single server name. It will, however, open further parallel connections to different server names. You can trick a browser into making more parallel connections to the same server using a technique called domain sharding. Look at where all the images on a page like All Quakes come from and you will see that there are up to 6 server names (static1.geonet.org.nz, static2 etc.) involved in serving the small icon maps that show the quake location. All of those server names point to the same server. This is domain sharding. Conventional wisdom used to say 6 was the best number. There is currently some debate about this number and it is being revised down. We may need to reduce the number of server names involved in serving GeoNet content.
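One detail worth getting right with sharding is that a given image should always come from the same server name, otherwise the browser caches it once per name. A simple way to do that is to pick the shard from a hash of the path. The following is a sketch under that assumption, not a description of how the GeoNet pages are actually generated; the function name, the `http` scheme and the example path are illustrative.

```python
import zlib


def shard_url(path: str, num_shards: int = 6, domain: str = "geonet.org.nz") -> str:
    """Map a path to one of static1..staticN so the same path always gets the same host."""
    # crc32 is stable across runs and machines, unlike Python's built-in hash().
    shard = zlib.crc32(path.encode("utf-8")) % num_shards + 1
    return f"http://static{shard}.{domain}{path}"


url = shard_url("/icons/quake-123.png")
print(url)
```

Reducing the number of server names is then a matter of changing `num_shards`, at the cost of some objects moving to a different name and being fetched again once.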

Use Gzip Compression

Gzip compression is another web server technique, this time for reducing the size of downloads on the wire. Only two thirds of compressible material is actually compressed. Roll your eyes and say lhaaaazy (then hurry off and check your settings).
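To get a feel for what is on offer, you can gzip some text yourself. The sketch below compresses a chunk of repetitive HTML with the standard library and reports the saving. The sample markup is made up, and real pages will compress by different amounts.

```python
import gzip


def gzip_sizes(body: bytes) -> tuple:
    """Return (original size, gzipped size) in bytes."""
    return len(body), len(gzip.compress(body))


# Made-up markup: the repetition is typical of HTML tables and lists.
rows = "".join(
    f"<tr><td>quake {i}</td><td>magnitude</td><td>depth</td></tr>\n" for i in range(200)
)
html = f"<html><body><table>\n{rows}</table></body></html>".encode("utf-8")

original, compressed = gzip_sizes(html)
print(f"original:   {original} bytes")
print(f"compressed: {compressed} bytes")
print(f"saving:     {100 * (1 - compressed / original):.0f}%")
```

Text formats such as HTML, CSS and JavaScript benefit the most. Formats that are already compressed, such as PNG and JPEG images, gain little from being gzipped again.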

Conclusions

Web performance is a complex topic. Optimizing content can be tricky and, due to ever-changing browsers and techniques, it can be hard to stay up to date (while writing this I’ve noted several things that we can improve). The best bet is to read one of the many excellent online resources, e.g., https://developers.google.com/speed/, and keep up with Steve Souders (if you can).

When you combine web content optimization with server optimization the difference between having a web site and having a fast web site can be an immense amount of work. But don’t be put off, start now and make any improvement you can. Combine two images, stamp out one request and give yourself a pat on the back. Your users will thank you for it.