GeoNet Web Hosting

16,257 Requests per Second. That’s Apache httpd Serving Y’all

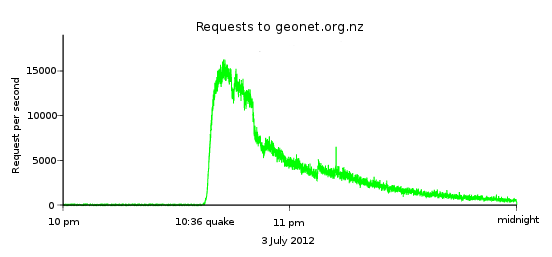

On July 3, 2012, a deep magnitude 6.5 earthquake near Opunake was felt widely across New Zealand. Traffic to the GeoNet website topped out at 16,257 requests per second only six minutes after the earthquake. It’s this very fast rise, from the regular background traffic of a few requests a second to peaks like we just experienced, that makes our web hosting challenging. Our normal web traffic looks like a well-organized denial of service attack.

The image above shows the pattern of traffic to the GeoNet website www.geonet.org.nz before and after the Opunake earthquake. Traffic rises from the background of a few requests a second to 16,257 requests a second in six minutes.

To serve that traffic we use the venerable open source web server Apache httpd. We are not alone: since April 1996 httpd has been the most popular web server on the internet.

Making Apache httpd Fast

It may be a surprise that we use Apache httpd, as there is a common assumption that it is a heavy server and that there are faster alternatives. That may be true (see ‘That Question’ below). This isn’t a web server shootout – it’s about how we tuned httpd. The tuning approach applies to any web server.

The Apache httpd docs are excellent, and if you care about performance you should read at least the sections on performance tuning and multi-processing modules.

Optimizing web server performance is simple, right? Just tweak your configuration to maximise the use of your hardware and the result is the number of concurrent connections you can handle. Here’s how we did it:

Our Servers and Content

Servers: Cheap, nothing fancy. Quad processor, dual core, 8 GB RAM, running Linux. You can probably make an effective web server using other operating systems, just like you can probably win Bathurst using antiquated V8 technology, but you might need to ban the more efficient competitors first.

Hard drives: Dunno, who cares? Something fast I guess, at least probably not a tape drive, definitely not SSD. Modern Linux kernels use memory for disk caching to vastly speed up file access.

Virtual machines: Nope, not for this job. Instant performance loss even with the good virtualisation software.

We run six web servers in New Zealand largely for reasons of geographic redundancy and up time. They are separated into two Content Delivery Networks (CDNs). More on that in a future post.

The content being served here is static pages on disk – the ultimate cache. More on caching and dynamic content in a future post.

The Golden Rule: Swapping is Death

RAM used to be hugely expensive. Swapping allows a server to temporarily use disk in place of memory, and therefore fake having more RAM than you could afford – the only downside being a HUGE performance loss. Hard drive access speed is much much slower than RAM so if your process (say httpd) is being routinely swapped to disk it will slow to a crawl. Swapping is web server death.

To make a fast web server you want to tune things to get as many processes as possible to fit into the RAM available on the hardware. The objective becomes to get the Resident Set Size (RSS - the part of the web server process that is held in RAM) as small as possible. The smaller the RSS, the more processes the server can run and the more connections you can handle.

Apache httpd – All Things to All People

Apache httpd is a very flexible web server. Between its core functionality and additional modules httpd can be used to solve a wide range of web hosting problems. The rich functionality comes at a cost: a larger RSS. If you use a package-installed httpd you may well get a lot of features you don’t need. They are often provided as shared objects that can be dynamically loaded as required, but even that adds to the RSS, because the module that loads shared objects has to be enabled.

Apache httpd provides a number of different multi-processing modules (MPMs) that control how it handles requests for content. Choosing the correct one for your requirements can have a huge impact on performance. The default configuration choices tend to be conservative and are intended to provide the widest range of compatibility with possibly old software. The default choice for Unix systems is prefork, but the docs suggest this may not be the best choice for highly loaded systems. Don’t trust me – go read the docs now. The docs also have this nugget:

“…Compilers are capable of optimizing a lot of functions if threads are used, but only if they know that threads are being used…”

Oh my, compilation… Fear not, it’s easy as long as you don’t peek under the covers.

Compiling and Tuning Apache

There are two tasks: choose a suitable MPM and get the RSS down so we can run lots of processes. We use the worker MPM. If you need to run other modules or server side scripting, you may have to use the prefork MPM.

We then look at what functionality we actually need Apache httpd to have and compile out everything we don’t need:

    ./configure \
    --prefix=/usr/local/apache \
    --with-mpm=worker \
    --enable-alias \
    --enable-expires \
    --enable-logio \
    --enable-rewrite \
    --enable-deflate \
    ...
    --disable-authn \
    --disable-authz \
    --disable-auth \
    --disable-dbd \
    --disable-ext \
    --disable-include \
    ...
    make
    make install

Follow this process and you will build an Apache httpd that will have the smallest RSS for your requirements.

Now just figure out how many processes you can run in the available RAM:

Find the total memory available:

cat /proc/meminfo | grep MemTotal

Stop httpd and figure out how much memory everything else running on the system is using:

ps aux | awk '{rss+=$6} END {print "rss =",rss/1024 "MB"}'

Subtract this from your total memory. Ponder the size of your content and how much memory caching you will use and subtract a suitable number from the memory left. This will be the total amount of RAM that you will let httpd use.
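As a rough sketch of that subtraction, here is a small shell helper. The function name and the 302 MB and 1000 MB figures are made up for illustration and are not our real numbers; plug in your own measurements.

```shell
#!/bin/sh
# Hypothetical helper: RAM budget for httpd, in MB.
# stdin = contents of /proc/meminfo
# $1    = RSS of everything else on the box, in MB (from the ps command above)
# $2    = MB to leave free for the kernel's disk cache (a judgment call)
httpd_budget_mb() {
    awk -v other="$1" -v cache="$2" \
        '/^MemTotal:/ { printf "%d\n", $2 / 1024 - other - cache }'
}

# Illustrative input only: a nominal 8 GB MemTotal line.
printf 'MemTotal:        8388608 kB\n' | httpd_budget_mb 302 1000
# prints 6890
```

On a real server you would feed it the live file instead, for example `httpd_budget_mb 302 1000 < /proc/meminfo`.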

Fire up httpd and put it under load – you choose the tool; httperf is good. Then look at the average httpd RSS:

ps -aylC httpd | grep httpd | awk '{httpdrss+=$8} END {print "rss =",httpdrss/NR/1024 "MB"}'

rss = 10.826MB

For our servers a calculator says we should be able to run 636 server processes, each running 50 worker threads (ThreadsPerChild), so 31,800 workers. Under test conditions httpd proved to be stable with this number of workers. For production we decided to be a little conservative and allow more memory overhead for other processes.
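If you would rather not reach for a calculator, the arithmetic can be sketched in shell. The 10.826 MB RSS and 50 threads per child are the figures above; the 6890 MB budget is an assumed value picked for illustration, and the function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: how many httpd processes fit in a memory budget.
# $1 = RAM reserved for httpd in MB (assumed value for this example)
# $2 = average RSS per httpd process in MB (measured with ps)
max_procs() {
    awk -v budget="$1" -v rss="$2" 'BEGIN { printf "%d\n", int(budget / rss) }'
}

# Total worker threads = processes * ThreadsPerChild.
total_workers() {
    echo $(( $1 * $2 ))
}

procs=$(max_procs 6890 10.826)
echo "processes: $procs"                       # prints "processes: 636"
echo "workers: $(total_workers "$procs" 50)"   # prints "workers: 31800"
```

Rounding down matters here: a fraction of a process is no use, and rounding up is an invitation to swap.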

Once you’ve settled on your number of workers, start them all at httpd startup. When our web servers come under load the rise in traffic is near vertical and the last thing we want httpd doing is spending time starting more threads when it should be serving content. Our worker configuration is then:

    <IfModule mpm_worker_module>
    StartServers 400
    ServerLimit 400
    MaxClients 20000
    MinSpareThreads 1
    MaxSpareThreads 20000
    ThreadsPerChild 50
    MaxRequestsPerChild 0
    </IfModule>
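In that configuration the numbers line up: 400 processes of 50 threads each gives the 20000 in MaxClients. A quick sanity check along these lines can catch a mismatch after editing. This is a sketch only; `check_worker_conf` is a hypothetical helper, not something that ships with httpd.

```shell
#!/bin/sh
# Sketch: confirm MaxClients equals ServerLimit * ThreadsPerChild.
# Reads worker MPM directives on stdin and prints "ok <capacity>"
# or a mismatch message. Other lines are ignored.
check_worker_conf() {
    awk '
        $1 == "ServerLimit"     { servers = $2 }
        $1 == "ThreadsPerChild" { threads = $2 }
        $1 == "MaxClients"      { clients = $2 }
        END {
            capacity = servers * threads
            if (clients == capacity)
                print "ok " capacity
            else
                print "mismatch: MaxClients=" clients " capacity=" capacity
        }'
}

check_worker_conf <<'EOF'
StartServers 400
ServerLimit 400
MaxClients 20000
ThreadsPerChild 50
EOF
# prints "ok 20000"
```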

Firewall and Kernel

Finally, you may need to tune your firewall and kernel. Disable conntrack for HTTP (port 80) connections:

    ...
    -A PREROUTING -p tcp -m tcp --dport 80 -j NOTRACK
    -A OUTPUT -p tcp -m tcp --sport 80 -j NOTRACK
    ...
    -A INPUT -p tcp -m tcp --dport 80 -j ACCEPT
    ...

And increase the size of the TCP backlog queue:

    tail -n 1 /etc/sysctl.conf
    -> net.ipv4.tcp_max_syn_backlog = 4096

Conclusions

Configured like this Apache httpd is a very fast and stable web server. It’s served us, and you, very well.

Interestingly, optimizing web content for performance is at least as important as optimizing web server performance but often far more subjective because it introduces nebulous concepts like look and style. More on that in the future.

That Question

Inevitably someone asks, “Why don’t you use Nginx? It’s faster.” I have no idea if Nginx would be faster for our use. A cursory glance at many of the comparisons available on the web shows that they are not comparing like functionality – installing two web server packages with the default configurations and then comparing them without tuning proves nothing. A meaningful test has to be for the same functionality and needs to try to exhaust the critical hardware resources.

I recently saw an interesting presentation by Jim Jagielski that discussed new features in Apache httpd 2.4 and made some comparisons to Nginx. Nginx just wins out in some situations but not all. People will quite rightly point out that Jim Jagielski is a co-founder of the Apache Software Foundation and probably unfairly imply a vested interest in the comparisons. The only certainty is that the competition will be good for all users of the internet. The debate and unfounded proclamations will continue.

Anyway, as of last week I don’t care. We’ve switched www.geonet.org.nz to Varnish.